The Sophistication Shift: AI Arms Race Redefines Digital Fraud

- 180% surge in high-quality, AI-augmented fraud attacks year-over-year

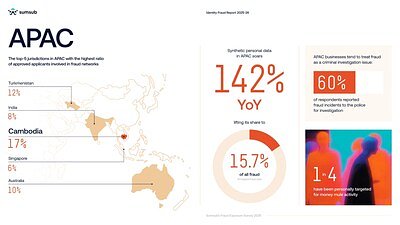

- 142% increase in synthetic personal data fraud in APAC, now accounting for 15.7% of all fraud attempts in the region

- 69% of consumers view AI-powered fraud as a greater threat than traditional identity theft

Experts warn that the digital fraud landscape is rapidly evolving due to AI-driven sophistication, requiring businesses to adopt dynamic, multi-layered security frameworks to combat increasingly convincing and automated attacks.

SINGAPORE – November 25, 2025 – The global war on digital fraud has entered a new, more perilous phase. While overall fraud volumes are beginning to stabilize, a "Sophistication Shift" is underway, driven by an industrialized ecosystem of criminals wielding generative AI to launch hyper-realistic and devastatingly effective attacks. This stark warning comes from the fifth annual Identity Fraud Report by Sumsub, a global leader in verification and anti-fraud solutions, which found that high-quality, AI-augmented attacks have surged a staggering 180% year-over-year.

The findings, corroborated by data across the cybersecurity industry, signal a fundamental change in the nature of digital crime. The era of high-volume, low-effort scams is giving way to a more professionalized and technologically advanced threat landscape. As businesses and consumers grapple with this new reality, the battle is no longer just about spotting a forged document, but about detecting a perfectly crafted synthetic identity brought to life by artificial intelligence.

The Industrialization of Deception

At the heart of this transformation is the widespread availability of powerful generative AI tools. What once required significant technical skill and resources—creating a convincing fake identity or deepfake video—can now be accomplished with alarming ease. This has fueled a booming Fraud-as-a-Service (FaaS) market, where platforms like "WormGPT" and "FraudGPT" offer AI-powered tools to automate the creation of malicious code and highly personalized phishing campaigns that can bypass traditional security filters.

According to the new report, which analyzed millions of verification checks, this shift is moving fraud from a game of quantity to one of quality. The data is echoed by other industry leaders. A recent study from Entrust revealed that deepfakes now account for one in five biometric fraud attempts, while INTERPOL has warned of "Phishing 3.0," where AI enables criminals to craft flawless, context-aware scams that transcend language barriers. This professionalization means that attacks are not only more convincing but also more targeted and damaging.

The implications for markets are profound. As consumer trust erodes—a 2025 Jumio study found 69% of consumers now see AI-powered fraud as a greater threat than traditional identity theft—the very foundation of digital commerce is at risk. The challenge for businesses is no longer simply compliance, but survival in an environment where deception is becoming indistinguishable from reality.

APAC: A Proving Ground for Next-Gen Fraud

Nowhere is this new reality more apparent than in the Asia-Pacific (APAC) region. The area has become both a prime target and a crucial testing ground for these sophisticated attacks. Sumsub's report highlights a shocking 142% year-over-year surge in fraud involving synthetic personal data in APAC, a category that now accounts for 15.7% of all fraud attempts in the region.

"The fraud landscape in APAC has changed faster in the past twelve months than in the previous five years combined," said Penny Chai, Vice President, APAC at Sumsub. "In 2025, we saw fraud rates decline across mature economies, including Singapore, Hong Kong, and South Korea — yet deepfakes and synthetic identities are rising faster than anywhere else in the world. This shift indicates that the region's success in combating basic scams has prompted attackers to adapt their tactics."

The data paints a picture of a deeply fragmented and volatile landscape. While Singapore recorded a 12% drop in its overall fraud rate, it simultaneously experienced a 158% surge in deepfake incidents. In one high-profile case earlier this year, a finance director was tricked by a deepfake video call into transferring nearly half a million dollars. Other nations are seeing explosive growth in deepfakes: the Maldives reported an astonishing 2,100% year-over-year increase, followed by Malaysia (408%) and Thailand (199%).

This technological escalation is coupled with a disturbing human element. The region is home to industrial-scale "scam centers," particularly in Southeast Asia, where transnational criminal networks force trafficked individuals to perpetrate fraud. Furthermore, the report highlights that one in four individuals in APAC has been targeted for recruitment as a money mule, one of the highest rates globally. Perceptions of fraud are shifting as well: 60% of APAC companies now report incidents to the police, treating fraud as a criminal enterprise rather than a mere compliance issue.

The Invisible Battlefield: Telemetry and AI Agents

As verification systems race to catch up, fraudsters are already moving to the next frontier. The most advanced attacks are becoming invisible, targeting the underlying infrastructure of the verification process itself. A key emerging tactic identified in the report is "telemetry tampering." Rather than forging a document or creating a deepfake, criminals manipulate the background data signals—such as device fingerprints, network information, and behavioral biometrics—to make a fraudulent application appear legitimate. By attacking the context instead of the content, they can bypass systems focused solely on document and facial authenticity.
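One way to picture a defense against telemetry tampering is a cross-check that looks for contradictions between background signals rather than inspecting the document or face. The sketch below is illustrative only and is not taken from the report: the `Telemetry` fields, the flag names, and the 5 ms cadence threshold are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from statistics import pstdev
from typing import List


@dataclass
class Telemetry:
    # All field names are hypothetical, chosen for illustration only.
    device_timezone: str   # timezone reported by the device
    ip_timezone: str       # timezone inferred from the IP address
    user_agent_os: str     # OS claimed in the User-Agent header
    fingerprint_os: str    # OS inferred from the device fingerprint
    keystroke_intervals_ms: List[float]  # gaps between keystrokes


def telemetry_inconsistencies(t: Telemetry) -> List[str]:
    """Return the cross-signal mismatches found in one session."""
    issues = []
    if t.device_timezone != t.ip_timezone:
        issues.append("timezone_mismatch")
    if t.user_agent_os.lower() != t.fingerprint_os.lower():
        issues.append("os_mismatch")
    # Near-uniform keystroke timing is unusual for a human typist.
    # The 5 ms threshold is an arbitrary illustrative value.
    intervals = t.keystroke_intervals_ms
    if len(intervals) >= 5 and pstdev(intervals) < 5.0:
        issues.append("uniform_typing_cadence")
    return issues
```

A single mismatch is weak evidence on its own (a traveler on a VPN would trigger the timezone flag), so a check like this would more plausibly feed a broader risk score than act as a verdict.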

Even more concerning is the rise of "AI fraud agents." These are not simple bots but autonomous systems that leverage generative AI to execute entire fraud campaigns end-to-end. An AI agent can create a synthetic identity, generate a hyper-realistic deepfake video to pass a liveness check, mimic human-like navigation on a website, and adapt its tactics in real time to overcome security measures. What began as isolated experiments in 2025 is expected to become a major wave of attacks by 2026.

This development signals the beginning of a true AI arms race. As criminals deploy offensive AI agents, security firms are developing their own defensive AI agents to autonomously detect and block these complex, high-speed attacks. The battlefield is shifting from a human-to-machine interaction to a machine-to-machine conflict fought in milliseconds.

The Strategic Imperative: Adapting to Dynamic Risk

For business leaders, investors, and healthcare organizations handling sensitive patient data, these trends represent a critical inflection point. The era of static, one-time security checks at the point of onboarding is over. Such measures are increasingly ineffective against attackers who can manipulate telemetry data and deploy autonomous AI agents.

The strategic imperative is to move towards a dynamic, multi-layered security framework. This involves adopting solutions that continuously assess risk throughout the user lifecycle, not just at the front door. Advanced systems must analyze a wide array of signals in real time, including device and network intelligence, behavioral patterns, and other contextual data, to distinguish between legitimate users and sophisticated non-human or manipulated attacks.
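As a rough illustration of continuous, multi-layered assessment, the sketch below combines per-layer risk scores into one session score and re-evaluates it at each lifecycle event. Everything in it is an assumption made for the example: the layer names, the weights, the 0.4 and 0.7 thresholds, and the action labels do not come from the report or from any vendor's product.

```python
from typing import Dict

# Hypothetical weights per signal layer; real values would be tuned on data.
LAYER_WEIGHTS: Dict[str, float] = {
    "device": 0.3,
    "network": 0.3,
    "behavior": 0.4,
}


def session_risk(layer_scores: Dict[str, float]) -> float:
    """Weighted sum of per-layer risk scores, each expected in [0, 1].

    A layer with no score is treated as 0.5 (unknown), not as safe.
    """
    total = 0.0
    for layer, weight in LAYER_WEIGHTS.items():
        total += weight * layer_scores.get(layer, 0.5)
    return total


def decide(risk: float) -> str:
    """Map a risk score to an action using illustrative thresholds."""
    if risk >= 0.7:
        return "block"
    if risk >= 0.4:
        return "step_up"  # e.g. request additional verification
    return "allow"


# The same user is re-scored at each event, not only at onboarding.
lifecycle = {
    "onboarding": {"device": 0.1, "network": 0.1, "behavior": 0.1},
    "login": {"device": 0.2, "network": 0.5, "behavior": 0.6},
    "payout": {"device": 0.9, "network": 0.8, "behavior": 0.9},
}
decisions = {event: decide(session_risk(scores)) for event, scores in lifecycle.items()}
```

In this example the user passes onboarding but is challenged at login and blocked at payout, which is the behavior a one-time onboarding check cannot provide.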

As APAC's digital economy continues to outpace global growth, the region's struggle offers a glimpse into the future for all markets. Securing this future requires a fundamental shift in mindset: from stopping individual fraudulent transactions to building an evolving, adaptive ecosystem of trust. In this new era of industrialized, AI-powered deception, the ability to learn and adapt in real time is no longer just a competitive advantage—it is the only path to survival.