Grid-Safe AI: A New Power System Aims to Tame AI's Energy Hunger

- Power demand on a single AI data center rack can swing from 10% to over 180% of nominal capacity in milliseconds.

- A single modern AI chip can consume over 1,200 watts.

- ON.energy's AI UPS is being tested at the National Laboratory of the Rockies, whose ARIES platform can simulate grid scenarios at a 20-megawatt scale.

Managing AI's volatile power demands is widely seen as critical to grid stability and data center reliability, and systems such as ON.energy's AI UPS are among the approaches now being put to the test.

BOULDER, CO – January 15, 2026 – In the high-stakes race to build the future of artificial intelligence, a silent but critical bottleneck has emerged: power. As tech giants build sprawling AI campuses with energy appetites scaling toward the gigawatt level, the very electrical grids they depend on are straining under the pressure. Now, a new collaboration in the foothills of the Rocky Mountains aims to solve this burgeoning crisis.

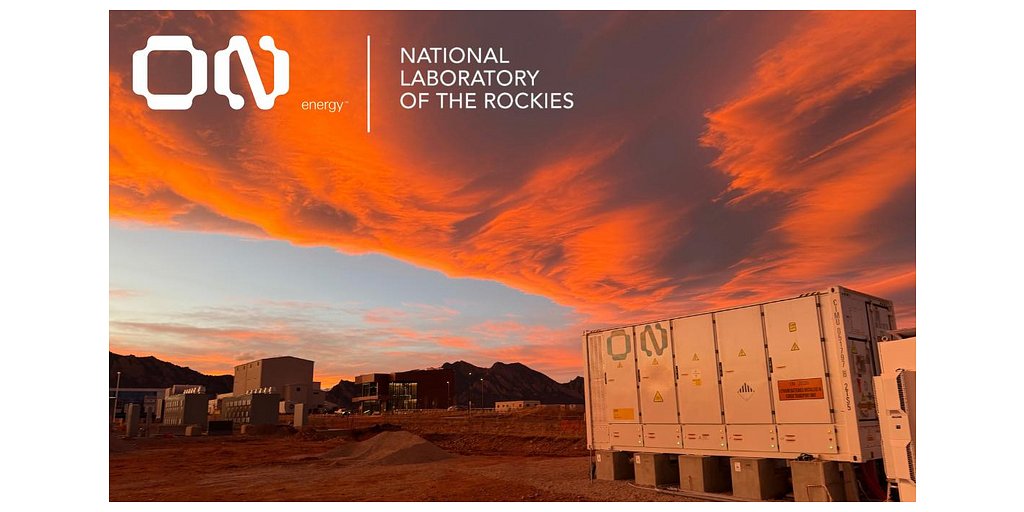

Energy infrastructure company ON.energy has announced the deployment of its "AI UPS™," a first-of-its-kind medium-voltage uninterruptible power system, at the National Laboratory of the Rockies (NLR). Located at the lab's advanced Flatirons Campus, the system will undergo rigorous testing to prove it can act as a crucial shock absorber between volatile AI data centers and the public power grid, potentially unlocking the next phase of AI expansion.

AI's Voracious and Volatile Appetite

The explosive growth of AI has created a power paradox. The same advanced graphics processing units (GPUs) that enable breakthroughs in machine learning are voracious and erratic energy consumers. Unlike traditional computing workloads, which have relatively stable power draws, AI training and inference processes cause massive, near-instantaneous power spikes. Industry research shows that power demands on a single data center rack can swing wildly from 10% to over 180% of its nominal capacity in milliseconds.

This volatility poses a severe threat to both the data centers themselves and the grid infrastructure that supports them. "Customers across the sector are searching for solutions as conventional UPS electrical architectures are unable to meet the requirements seen in today’s AI compute environments," ON.energy noted in its announcement.

The scale of the problem is staggering. A single modern AI chip can consume over 1,200 watts, and data center operators are now designing racks that draw over 100 kilowatts—a density unimaginable just a few years ago. According to U.S. Department of Energy analysis, the rapid growth of data centers, driven primarily by AI, is a major factor in projections that see national electricity demand accelerating significantly over the next decade. This has led to multi-year delays for new data center grid connections and raised serious concerns among regulators about grid reliability and the potential for widespread disturbances.
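To put those figures side by side, here is a rough back-of-the-envelope sketch. It assumes, purely for illustration, that a 100-kilowatt rack is the "nominal capacity" to which the 10%-to-180% swing applies, and it treats the "over 1,200 watts" and "over 100 kilowatts" figures as round numbers. The function names are hypothetical and the chip count ignores cooling, networking, and other overhead.

```python
# Illustrative arithmetic only; the pairing of these figures is an assumption.
NOMINAL_RACK_KW = 100.0   # rack design point cited in the article (treated as nominal)
LOW_FRACTION = 0.10       # low end of the reported swing
HIGH_FRACTION = 1.80      # high end of the reported swing
CHIP_WATTS = 1200.0       # per-chip draw cited in the article


def rack_swing_kw(nominal_kw, low=LOW_FRACTION, high=HIGH_FRACTION):
    """Return (minimum draw, peak draw, peak-to-trough swing) in kilowatts."""
    low_kw = nominal_kw * low
    high_kw = nominal_kw * high
    return low_kw, high_kw, high_kw - low_kw


def chips_per_rack(nominal_kw, chip_watts=CHIP_WATTS):
    """Whole number of chips whose draw fits within the rack's nominal power."""
    return int(nominal_kw * 1000 // chip_watts)


if __name__ == "__main__":
    low_kw, high_kw, swing_kw = rack_swing_kw(NOMINAL_RACK_KW)
    print(f"Rack draw ranges from {low_kw:.0f} kW to {high_kw:.0f} kW "
          f"(a {swing_kw:.0f} kW swing)")
    print(f"Chips per rack at nominal power: {chips_per_rack(NOMINAL_RACK_KW)}")
```

Under these assumptions a single rack would move between roughly 10 and 180 kilowatts, a swing larger than the rack's own nominal rating, which gives a sense of why the effect compounds across thousands of racks.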

A New Architecture for Power Resilience

ON.energy's AI UPS is engineered specifically to address this challenge. By moving from a traditional low-voltage architecture to a medium-voltage design, the system is built to handle the immense power loads of hyperscale AI facilities. Its core function is to create a dynamic buffer that isolates the grid from the data center's chaotic power demands.

The system is designed to absorb the steep GPU power transients and rapid load swings, providing a clean, stable stream of power to the sensitive computing hardware. This not only protects the expensive AI chips from damaging power fluctuations but also ensures the data center can "ride through" grid-side disturbances, a critical requirement for maintaining uptime and data integrity.
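The buffering idea can be illustrated with a toy model. The sketch below is not ON.energy's control scheme, which the announcement does not describe; it simply assumes the grid-side draw is allowed to change by at most a fixed amount per time step (a hypothetical ramp limit), with a storage buffer supplying or absorbing whatever difference remains. All names and numbers are illustrative.

```python
def smooth_grid_draw(load_kw, max_ramp_kw):
    """Ramp-limit the grid draw and report what a buffer must cover.

    load_kw: sequence of data center demand samples, in kilowatts.
    max_ramp_kw: largest change in grid draw allowed per step (assumed limit).
    Returns (grid_kw, buffer_kw); positive buffer values mean the buffer
    discharges into the load, negative values mean it absorbs surplus.
    """
    grid_kw = []
    buffer_kw = []
    previous = load_kw[0]  # assume the grid starts matched to the first sample
    for demand in load_kw:
        # Move the grid draw toward demand, but no faster than the ramp limit.
        step = max(-max_ramp_kw, min(max_ramp_kw, demand - previous))
        previous = previous + step
        grid_kw.append(previous)
        buffer_kw.append(demand - previous)
    return grid_kw, buffer_kw


if __name__ == "__main__":
    # A rack-like load jumping from 10 kW to 180 kW and back (illustrative).
    load = [10, 180, 180, 10]
    grid, buffer = smooth_grid_draw(load, max_ramp_kw=50)
    for demand, g, b in zip(load, grid, buffer):
        print(f"demand={demand:>4} kW  grid={g:>4} kW  buffer={b:>4} kW")
```

In this toy run the grid never sees a step larger than 50 kilowatts, while the buffer covers the remainder of each spike. A real system would also have to track the buffer's stored energy and power limits, which this sketch leaves out.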

“Customers want to see how a medium-voltage UPS between grid and compute behaves under real grid disturbances and load volatility for their unique GPU-profile,” said Dax Kepshire, President of ON.energy’s Data Center division. “Our deployment at NLR’s Flatirons Campus lets us provide extensive performance validation of our AI UPS meeting each customer’s unique requirements in a controlled environment.”

A key differentiator for the technology is its grid-interactive capability. While conventional UPS systems are often a sunk cost, this new architecture is designed to potentially participate in energy markets. This could allow data center operators to generate revenue by providing grid stabilization services, turning a critical piece of infrastructure into a financial asset.

Validation at a World-Class Facility

The partnership with the National Laboratory of the Rockies, the name the National Renewable Energy Laboratory (NREL) adopted in late 2025, is central to validating these claims. The lab's Flatirons Campus is home to the Advanced Research on Integrated Energy Systems (ARIES) platform, a sophisticated environment capable of simulating complex grid scenarios at a 20-megawatt scale.

Under their agreement, NLR researchers will subject the AI UPS to a battery of tests. They will simulate the erratic power profile of a full-scale AI data center while also recreating a wide range of grid events, from voltage sags to complete outages. This will provide verifiable, third-party data on how the system stabilizes loads, protects equipment, and supports the broader electrical grid.

The rapid timeline of the project underscores the urgency of the issue. “With ON.energy the teams have gone from first contact through contracting and construction in less than six months here at Flatirons Campus,” stated Andrew Hudgins, the ARIES Laboratory Program Manager. “NLR is committed to advancing technologies that improve the flexibility and reliability of the nation’s power systems and at the speed this sector demands.”

This controlled testing environment is also proving to be a major draw for the industry's biggest players. The press release confirms that the installation is already attracting significant interest from multiple hyperscalers, who can use the platform to run their own validation scenarios before committing to large-scale deployments in their own facilities.

The Race to Power the Future of Compute

ON.energy is entering a competitive and rapidly evolving market. Established electrical infrastructure giants like ABB, Schneider Electric, and Vertiv are also racing to develop next-generation power solutions for the AI boom. Many are similarly focusing on medium-voltage UPS systems and forming strategic alliances with chipmaker NVIDIA to create reference designs for AI-ready data centers.

ABB, for example, has promoted its own medium-voltage UPS, the HiPerGuard, and is working with data center builder Applied Digital on a new campus that will be used by a major US-based hyperscaler. Schneider Electric has also partnered with NVIDIA, highlighting "software-defined power" and compact, high-density systems to manage energy flow efficiently.

The common thread is a recognition that the old model of data center power infrastructure is no longer sufficient. The future of AI is inextricably linked to the development of a more intelligent, resilient, and interactive energy backbone. The successful validation of technologies like ON.energy's AI UPS at facilities like NLR could be a pivotal step in ensuring that the digital revolution does not outpace the physical infrastructure required to sustain it. As the demand for compute continues its exponential climb, the ability to manage power at an unprecedented scale will define the leaders in the next era of technology.
