AI Model Reaches 94.5% Accuracy in Skin Cancer Detection by Combining Images and Patient Data

Researchers have developed a new AI model that improves melanoma detection by integrating dermoscopic images with key patient data, a potential step forward for early diagnosis and access to screening.

AI at Work. Beyond the Image: How Patient Data Is Revolutionizing Skin Cancer Detection

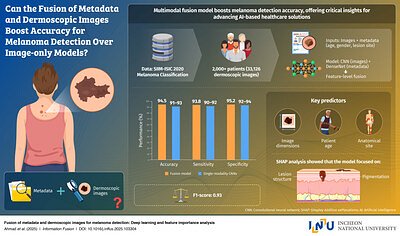

SEOUL, SOUTH KOREA – November 17, 2025 – A new artificial intelligence model developed by researchers at Incheon National University (INU) detects melanoma with 94.5% accuracy by combining dermoscopic images with patient metadata. The study, published in the journal Information Fusion, points to a possible shift in skin cancer diagnostics: away from image-only analysis and toward models that also weigh a patient's clinical context.

The Limitations of Visual Diagnosis

For decades, dermatologists have relied heavily on visual inspection of skin lesions, often aided by dermoscopy, a technique that gives a magnified, illuminated view of the skin. While skilled practitioners can achieve high accuracy, the process remains subjective and prone to error, particularly in the early stages of melanoma, when lesions can be subtle. "The visual assessment of skin lesions can be tricky," says one expert. "Subtle variations, early-stage lesions, and differing presentations across skin types can all contribute to misdiagnosis."

Existing AI-powered skin cancer detection tools have largely focused on image analysis, training algorithms to identify patterns and characteristics indicative of malignancy. However, these systems often overlook critical contextual factors. “Relying solely on images is like looking at a single piece of a puzzle,” explains Professor Gwangill Jeon of INU’s Lab of Advanced Imaging Tech. “You’re missing important clues that could significantly impact the diagnosis.”

A Multimodal Approach: Integrating Patient Data

The INU team's innovation lies in its "multimodal fusion framework," which integrates dermoscopic images with patient metadata such as age, gender, and lesion location. The approach reflects the fact that a patient's individual characteristics and the anatomical site of a lesion carry diagnostic information of their own. The system processes each data type, images and metadata, with a separate neural network and then fuses the two streams at a deeper level of the model. This lets the AI pick up patterns and correlations between the two kinds of data that image-only analysis would miss.

“We discovered that lesion size, patient age, and anatomical site were key factors contributing to accurate detection,” Professor Jeon notes. “By incorporating these variables into the model, we were able to significantly improve its performance.”
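The article does not publish the model's architecture, so the following Python sketch only illustrates the general idea of fusing two separately processed inputs, under stated assumptions. The image branch receives a precomputed feature vector (a stand-in for the output of a convolutional network, which is not shown), metadata is encoded as scaled age plus one-hot gender and body site, and each branch is a single untrained dense layer. The names `encode_metadata` and `FusionSketch`, the category lists, and the layer sizes are hypothetical and are not the INU team's code.

```python
import math
import random

# Hypothetical vocabularies: the article names age, gender and lesion location
# as metadata, but does not say how the INU model encodes them.
SEXES = ["female", "male", "unknown"]
SITES = ["head/neck", "trunk", "upper extremity", "lower extremity", "other"]


def encode_metadata(age, sex, site):
    """Scale age to roughly [0, 1] and one-hot encode sex and body site."""
    vec = [age / 100.0]
    vec += [1.0 if sex == s else 0.0 for s in SEXES]
    vec += [1.0 if site == s else 0.0 for s in SITES]
    return vec


def dense(x, weights, bias):
    """Fully connected layer followed by a ReLU activation."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, bias)]


def random_layer(n_in, n_out, rng):
    """Random, untrained weights; a real system would learn these from data."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


class FusionSketch:
    """Two branches (image features, metadata) fused by concatenation."""

    def __init__(self, img_dim, meta_dim, hidden=8, seed=0):
        rng = random.Random(seed)
        self.img_branch = random_layer(img_dim, hidden, rng)
        self.meta_branch = random_layer(meta_dim, hidden, rng)
        self.head = [rng.uniform(-0.5, 0.5) for _ in range(2 * hidden)]

    def predict(self, img_features, metadata):
        h_img = dense(img_features, *self.img_branch)   # image branch
        h_meta = dense(metadata, *self.meta_branch)     # metadata branch
        fused = h_img + h_meta                          # list concatenation = fusion
        logit = sum(w * f for w, f in zip(self.head, fused))
        return 1.0 / (1.0 + math.exp(-logit))           # probability-like score


if __name__ == "__main__":
    meta = encode_metadata(62, "male", "trunk")
    img = [0.2, 0.7, 0.1, 0.9]  # placeholder for a CNN image embedding
    model = FusionSketch(img_dim=len(img), meta_dim=len(meta))
    print(f"score: {model.predict(img, meta):.3f}")  # untrained, so not a diagnosis
```

Concatenating the two branch outputs before the final layer lets the classifier weigh image and metadata features jointly rather than averaging two independent predictions. In practice, every weight here would be trained on labeled dermoscopic datasets.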

Beyond Accuracy: The Rise of Personalized Diagnostics

The implications of this research extend beyond simply achieving a higher accuracy rate. By leveraging patient-specific data, the INU model paves the way for more personalized diagnostics. “This isn’t about replacing dermatologists,” says another expert. “It's about providing them with a powerful tool that can augment their expertise and help them make more informed decisions.”

The technology has several potential applications. One promising avenue is telemedicine, where the AI model could be built into smartphone apps or online platforms so that patients can screen for skin cancer at home. This could be particularly valuable in underserved communities with limited access to dermatologists. In clinical settings, the model could also serve as a second opinion for dermatologists, helping to reduce misdiagnosis and improve patient outcomes. "Think of it as an AI assistant that can flag potentially suspicious lesions and provide additional insights," says one source.

Several companies already offer AI-powered skin cancer detection tools, but most analyze a single type of input without patient metadata. DermaSensor, for instance, offers an FDA-cleared handheld device that uses optical spectroscopy and AI to analyze lesions, while SkinVision offers an app-based, image-driven solution available in Europe and Australia. Neither combines its measurements with patient metadata the way the INU model does. "The INU team's integration of patient metadata is a significant differentiator," says an expert. "It's a step towards more comprehensive and accurate diagnostics."

Funding and Future Directions

Professor Jeon's research is supported by several grants from the National Research Foundation (NRF) and the Ministry of Health and Welfare, reflecting South Korea's commitment to advancing AI in healthcare. His lab works on multimodal medical AI and on foundation models for healthcare applications.

The team is also exploring ways to address potential biases in the model and to ensure that it generalizes across diverse populations and skin types. Future research will expand the range of metadata fed into the model, including patient history, lifestyle factors, and genetic predispositions, to further improve its accuracy and personalization. "We believe that this is just the beginning," says Professor Jeon. "The potential of AI to revolutionize skin cancer diagnostics is immense."